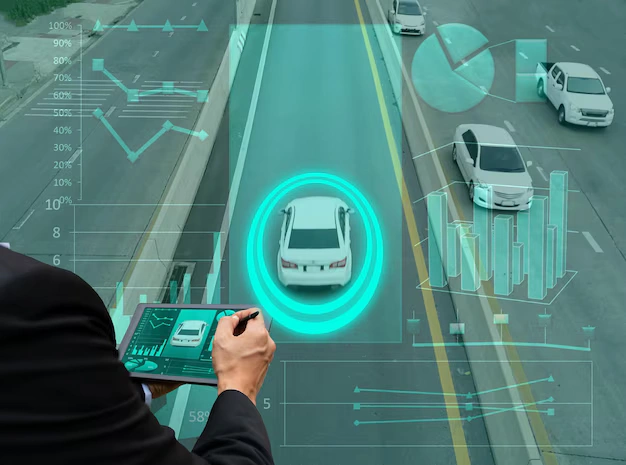

Self-driving cars, also known as autonomous vehicles (AVs), have long fascinated engineers, futurists and the general public. The notion of vehicles that can drive themselves with little or no human involvement is game-changing for transportation. Thanks to advances in artificial intelligence (AI), machine learning and sensor technology, self-driving cars have gone from science fiction to a rapidly developing reality. But achieving complete autonomy, in which vehicles can safely and effectively navigate all environments without human supervision, poses many challenges.

In this post, we’ll review what self-driving cars actually are, how they are classified into levels of automation, and what major obstacles stand in the way of full automation.

What Are Self-Driving Cars?

Self-driving cars are vehicles that navigate the road and make driving decisions without human involvement by using a combination of sensors, cameras, radar, and artificial intelligence algorithms. The term also covers cars with systems intended to reduce the need for a human driver, using some combination of cameras, lidar and radar to manage acceleration, braking, steering and other driving functions.

Fully autonomous cars are not yet on the road, but the technology is progressing through a range of levels, each with its own scope of operation. According to the Society of Automotive Engineers (SAE), there are six levels of vehicle automation, from Level 0 (no automation) to Level 5 (full automation). The higher the level, the more of the driving task the vehicle can handle without human intervention.

Levels of Automation

- Level 0 (No Automation): The driver is fully responsible for all driving functions.

- Level 1 (Driver Assistance): The vehicle offers basic support, such as cruise control or steering assistance, but the driver is still in full control.

- Level 2 (Partial Automation): The vehicle can control steering and acceleration or braking at the same time, but the human driver must remain engaged and monitor the driving environment at all times.

- Level 3 (Conditional Automation): The vehicle can perform all driving tasks in selected situations (e.g., highway driving), but the driver must be prepared to take over when the system requests it.

- Level 4 (High Automation): The car can drive itself without human intervention, but only within a limited operational design domain (e.g., a certain city and/or certain weather conditions).

- Level 5 (Full Automation): No human input is needed. The vehicle operates in any environment, under any circumstance.
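For readers who think in code, the six levels above can be sketched as a small enumeration. This is only an illustration of the list: the names `SAELevel`, `human_must_monitor` and `human_is_fallback` are made up for this post and do not come from SAE or any library.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """The six automation levels listed above (names are illustrative)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_must_monitor(level: SAELevel) -> bool:
    """Levels 0-2: the human watches the road at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def human_is_fallback(level: SAELevel) -> bool:
    """Levels 0-3: a human must be available to take over the driving."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION


if __name__ == "__main__":
    for level in SAELevel:
        print(level.value, level.name,
              "monitor:", human_must_monitor(level),
              "fallback:", human_is_fallback(level))
```

The point of the two helper functions is the step between Level 2 and Level 3 (the human stops monitoring continuously) and between Level 3 and Level 4 (the human is no longer the fallback).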

Most automation on the road today is at Level 2 or 3, though many companies, Tesla and Waymo to name just two, are striving to achieve Level 4 and Level 5 automation. However, many obstacles must be cleared before fully autonomous cars are a common sight on US roads.

So, what does it take to achieve full automation?

The prospect of self-driving cars is enticing, but full automation is still far away because of a number of technical, regulatory, social, and ethical challenges. These challenges vary in nature across the stages of development, and overcoming them will require close cooperation among automotive manufacturers, technology companies, regulators and policymakers. Here are the main obstacles:

Technical Challenges

- AI and Decision Making: AI systems are responsible for interpreting the data from sensors and making decisions in real time. Making split-second decisions in dynamic, unpredictable scenarios is extremely difficult. A driverless car needs to recognize pedestrians, cyclists, animals, other vehicles, and fine details such as road markings under different conditions. It must also make decisions that involve complicated ethical dilemmas, like how to respond in the event of an accident.

- Complexity of Urban Environments: Autonomous vehicles must be capable of safely traversing a multitude of environments, from quiet suburban roads to busy city streets with dense traffic and pedestrians. City streets often present hard-to-predict variables, including construction zones, blocked streets and erratic human driving behavior, that are particularly difficult for an autonomous system to contend with.

- Human Error vs. Machine Error: One of the biggest selling points of self-driving cars is that they could cut down on traffic accidents caused by human error. But there have been accidents involving self-driving cars during testing, which have raised concerns about the reliability of autonomous systems. Such incidents often get close scrutiny, particularly when anyone is hurt or killed. Only when autonomous vehicles demonstrate a safety record consistently superior to that of human-driven cars will public confidence in self-driving technology grow.

- Failure Modes and Alternatives: Autonomous systems should be designed to handle possible points of failure, ensuring that if one part of the system fails (such as a sensor or processing unit), the remaining components can maintain operation in a safe mode. In other words, if a sensor fails, the car should be able to fall back on another sensor or system to operate safely. That kind of reliability for a motorist potentially barreling down the highway at 65 mph is crucial for widespread adoption.
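The fallback idea in the last bullet can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how any production vehicle works: the `SensorReading` type, the sensor names, and the three mode strings are all hypothetical, and a real system would fuse several sensors rather than pick one.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SensorReading:
    name: str                    # hypothetical label, e.g. "lidar"
    healthy: bool                # result of the sensor's self-check
    distance_m: Optional[float]  # distance to nearest obstacle, if known


def select_reading(readings: List[SensorReading]) -> Optional[SensorReading]:
    """Return the first usable reading in priority order, or None."""
    for reading in readings:
        if reading.healthy and reading.distance_m is not None:
            return reading
    return None


def choose_mode(readings: List[SensorReading]) -> str:
    """Pick an operating mode based on which sensor is still usable."""
    chosen = select_reading(readings)
    if chosen is None:
        return "SAFE_STOP"   # nothing usable: bring the car to a safe stop
    if chosen is readings[0]:
        return "NORMAL"      # the primary sensor is working
    return "DEGRADED"        # running on a backup sensor


if __name__ == "__main__":
    readings = [
        SensorReading("lidar", healthy=False, distance_m=None),
        SensorReading("radar", healthy=True, distance_m=42.0),
    ]
    print(choose_mode(readings))
```

Here the list order stands in for sensor priority. When the primary sensor fails, the car keeps operating on the backup in a degraded mode, and only stops when no usable reading is left.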

Infrastructure and Regulation

Poor Roadway Infrastructure: Self-driving cars need roads and infrastructure that support autonomous technology. Most existing roads and traffic systems weren’t designed for AVs and lack features such as smart traffic lights or consistent road markings. Widespread use of autonomous vehicles is likely to require substantial infrastructure upgrades.

Government Regulation: Regulators across the globe must set and enforce guidelines for the creation and use of autonomous vehicles. The rules should cover issues such as safety standards, testing protocols, road usage and liability. Regulations may differ from country to country or region to region, which makes deploying autonomous vehicles globally a challenge.

Public Acceptance and Trust

Human Drivers and Mixed Traffic: Fully autonomous vehicles are still being developed, and for many years human-driven and self-driving vehicles will share the roads. This makes it hard for self-driving cars to anticipate and respond to the often unpredictable actions of human drivers. For example, how should an autonomous vehicle treat a human driver who ignores the traffic rules and behaves unexpectedly?

Consumer Skepticism: Even once the technology is mature, consumers are likely to remain skeptical of self-driving cars. People might feel more secure in human-driven vehicles, especially if they believe that autonomous systems might fail or make an unsafe decision. Gaining this trust will not happen overnight, and will need extensive trials combined with transparency and clear communication of the safety and benefits of autonomous vehicles.

Conclusion

Self-driving cars are one of the most exciting innovations in transportation. They could decrease accidents, enable smoother traffic flow, reduce emissions, and potentially provide greater access to transportation. But the obstacles to achieving complete automation are daunting. The path to truly autonomous vehicles is fraught with technical, ethical, and regulatory challenges, from sensor reliability to decision-making algorithms and policy issues. Many of these are still being explored, and all of them require deliberation.

Level 5 automation, where vehicles can safely navigate any environment independently of a human, remains a work in progress, even as various milestones are being achieved. Given time and technological advancement, along with new solutions to these challenges, self-driving cars could become a standard and safe form of transportation. But for now, full automation still calls for careful consideration, collaboration, and innovation.